Why in News

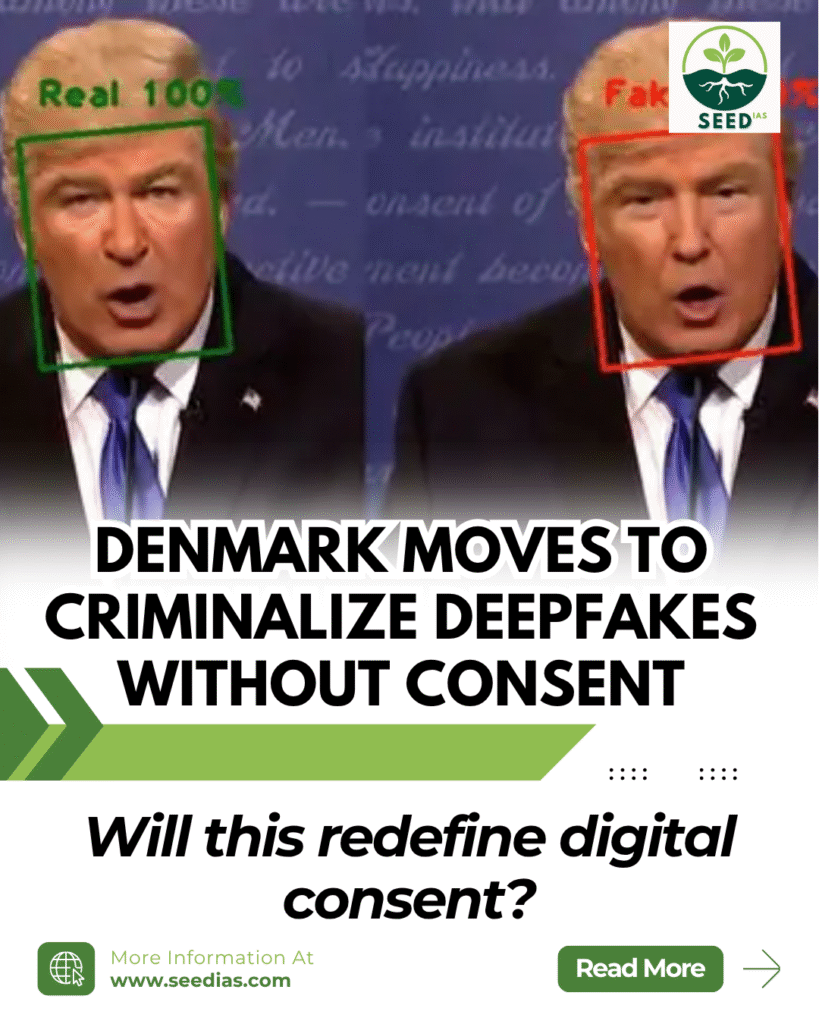

Denmark has proposed a first-of-its-kind copyright amendment that bans the unauthorized sharing of deepfakes. It treats realistic synthetic imitations of a person as copyright violations and protects a person’s digital likeness for 50 years after death.

Key Terms and Concepts

| Term | Explanation |
|---|---|
| Deepfakes | AI-generated synthetic media that falsely depict a person saying or doing things they never said or did. |
| GANs (Generative Adversarial Networks) | AI technique in which two neural networks compete: a generator creates fake content and a discriminator tries to detect it, so the fakes improve with each round. |
| Voice Cloning | Use of AI speech synthesis to replicate a person’s voice and speech patterns. |
| Safe Harbour | Legal immunity for platforms from liability for user-generated content, conditional on compliance with takedown rules. |
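To make the "two networks" idea in the GAN entry concrete, the sketch below computes the commonly used adversarial losses from a discriminator's outputs. It is an illustration only: no network is trained, the function names `discriminator_loss` and `generator_loss` are hypothetical, and the probabilities are made-up values chosen to show the trend.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real media near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the fake is scored as real."""
    return -math.log(d_fake)

# d_real / d_fake: the discriminator's probability that an input is real.
# Both sets of values are illustrative assumptions, not measurements.
early = {"d_real": 0.9, "d_fake": 0.1}  # crude fakes are easily spotted
late = {"d_real": 0.6, "d_fake": 0.5}   # fakes are now hard to tell apart

for stage, p in (("early", early), ("late", late)):
    print(stage,
          "D loss:", round(discriminator_loss(p["d_real"], p["d_fake"]), 3),
          "G loss:", round(generator_loss(p["d_fake"]), 3))
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the competition that pushes deepfakes toward realism.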

Denmark’s Proposed Deepfake Law

| Feature | Details |
|---|---|
| Ban on Sharing Without Consent | Prohibits sharing realistic deepfakes of a person’s face, voice, or likeness without their consent. |
| Posthumous Protection | A person’s digital likeness remains protected for 50 years after death. |
| Platform Liability | Platforms must quickly remove flagged deepfakes or face legal penalties. |
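The 50-year posthumous term is simple date arithmetic, sketched below. The summary above does not say how the term is counted, so this sketch assumes it runs from the date of death to the 50th anniversary; the helper names `protection_ends` and `is_protected` are hypothetical.

```python
from datetime import date

PROTECTION_YEARS = 50  # term given in the table above

def protection_ends(date_of_death: date, years: int = PROTECTION_YEARS) -> date:
    """Date on which protection lapses (assumption: counted from the date of death)."""
    try:
        return date_of_death.replace(year=date_of_death.year + years)
    except ValueError:
        # 29 February has no anniversary in a non-leap year; use 28 February.
        return date_of_death.replace(year=date_of_death.year + years, day=28)

def is_protected(date_of_death: date, on: date) -> bool:
    """True while the likeness is still inside the protection term."""
    return on < protection_ends(date_of_death)

print(protection_ends(date(2025, 7, 1)))
print(is_protected(date(2000, 1, 1), date(2049, 12, 31)))
```

If the final law counts the term differently, for example from the end of the calendar year of death, only `protection_ends` would need to change.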

How Deepfakes Work

| Type | Description |
|---|---|
| Face Swap | Replaces a person’s face with another person’s face in a video or image. |
| Voice Clone | Uses AI to mimic someone’s voice. |
| Source Manipulation | Alters original footage to change actions or speech. |

Detection Techniques

- Signs: Unnatural blinking, lighting mismatches, lip-sync errors

- Tools: Microsoft Video Authenticator, Sensity AI, Deepware, etc.

- Platforms are deploying detection software and warning labels.
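The three visual signs listed above can be treated as a simple triage checklist, sketched below. This is not how the named tools work: `ClipSigns`, `triage`, and the two-sign threshold are hypothetical and only show how a reviewer might combine the signs before escalating a clip.

```python
from dataclasses import dataclass

@dataclass
class ClipSigns:
    """Hypothetical observations recorded by a human reviewer for one clip."""
    unnatural_blinking: bool
    lighting_mismatch: bool
    lip_sync_errors: bool

def triage(signs: ClipSigns, min_signs: int = 2) -> str:
    """Count the warning signs present and suggest a next step.

    The default threshold of two signs is an illustrative assumption.
    """
    count = sum([signs.unnatural_blinking,
                 signs.lighting_mismatch,
                 signs.lip_sync_errors])
    if count >= min_signs:
        return "send to detection tool"
    if count == 1:
        return "review manually"
    return "no visible signs"

print(triage(ClipSigns(True, False, True)))
```

A result of "no visible signs" does not prove a clip is authentic, since well-made deepfakes may show none of these cues.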

India’s Legal Framework on Deepfakes

| Law/Provision | Application |
|---|---|
| IT Act, 2000 (Sections 66D, 67, 67A, 67B) | Covers impersonation using a computer resource, obscene content, and the publication or transmission of sexually explicit deepfakes. |
| IT Rules, 2021 | Mandate prompt takedown of fake content; platforms that fail to comply lose safe harbour status. |
| Copyright Act, 1957 | Protects against unauthorized use of copyrighted likeness or media. |
| CERT-In & I4C | Issue advisories and assist in deepfake-related cybercrime response. |

Judicial Interventions in India

| Case | Significance |
|---|---|
| Anil Kapoor v. Simply Life India & Ors. (2023) | Delhi HC recognized the actor’s rights over his voice, face, and signature dialogues. |
| Shivaji Rao Gaikwad (Rajinikanth) v. Varsha Productions (2015) | Madras HC upheld celebrity personality rights over name, image, and caricature. |

In a Nutshell

Mnemonic: D.E.E.P.F.A.K.E.

- Denmark leads in deepfake regulation
- Empowers posthumous rights
- Enforces consent-based sharing
- Platforms made accountable
- Facial and voice rights protected
- AI misuse addressed
- Kapoor and Rajinikanth cases set precedent
- Enact strict frameworks globally

Prelims Practice Questions

1. What is the primary AI technique behind the creation of deepfakes?

A. Decision Trees

B. Generative Adversarial Networks (GANs)

C. Linear Regression

D. Convolutional Neural Networks

2. Under which Indian law can sexually explicit deepfake content be prosecuted?

A. Indian Penal Code, 1860

B. Information Technology Act, 2000 – Section 67A

C. Right to Privacy Act

D. Press and Registration Act, 1867

3. Which of the following best describes the “Safe Harbour” clause?

A. A platform’s guarantee of data privacy

B. Legal immunity for platforms from liability for user-generated content

C. A mechanism to protect whistleblowers

D. A copyright provision to protect public domain content

Mains Practice Questions

1. With AI-generated deepfakes becoming increasingly convincing, discuss the need for a dedicated legal framework to address deepfake misuse in India. 10 Marks (GS2 – Governance, Cybersecurity)

2. Examine how deepfake technology impacts individual privacy and democratic institutions. How can India balance innovation and regulation in this domain? 10 Marks (GS3 – Technology, Ethics)

Prelims Answers and Explanations

| Qn | Answer | Explanation |
|---|---|---|
| 1 | B | GANs are the core technology enabling realistic deepfake generation. |
| 2 | B | Section 67A of the IT Act penalizes publishing or transmitting sexually explicit material in electronic form. |
| 3 | B | Safe Harbour gives platforms legal immunity for user-generated content unless they fail to comply with takedown rules. |